What is TLS?

TLS is a cryptographic protocol that provides end-to-end security of data sent between applications over the Internet. It is mostly familiar to users through its use in secure web browsing, and in particular the padlock icon that appears in web browsers when a secure session is established. However, it can and indeed should also be used for other applications such as e-mail, file transfers, video/audioconferencing, instant messaging and voice-over-IP, as well as Internet services such as DNS and NTP.

TLS evolved from Secure Sockets Layer (SSL), which was originally developed by Netscape Communications Corporation in 1994 to secure web sessions. SSL 1.0 was never publicly released, whilst SSL 2.0 was quickly replaced by SSL 3.0, on which TLS is based.

TLS was first specified in RFC 2246 in 1999 as an application-independent protocol, and whilst it was not directly interoperable with SSL 3.0, it offered a fallback mode if necessary. However, SSL 3.0 is now considered insecure and was deprecated by RFC 7568 in June 2015, with the recommendation that TLS 1.2 should be used. TLS 1.3 is also currently (as of December 2015) under development and will drop support for less secure algorithms.

It should be noted that TLS does not secure data on end systems. It simply ensures the secure delivery of data over the Internet, avoiding possible eavesdropping and/or alteration of the content.

TLS is normally implemented on top of TCP in order to encrypt Application Layer protocols such as HTTP, FTP, SMTP and IMAP, although it can also be implemented on UDP, DCCP and SCTP (e.g. for VPN and SIP-based application uses). The datagram variant is known as Datagram Transport Layer Security (DTLS) and is specified in RFCs 6347, 5238 and 6083.
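The layering of TLS on top of TCP can be sketched with Python's standard `ssl` module. This is a minimal illustration rather than a recommended client: the function name `tls_handshake_info` and its defaults are assumptions made for this example, and it needs network access to a TLS-enabled server when called.

```python
import socket
import ssl


def tls_handshake_info(host: str, port: int = 443, timeout: float = 5.0):
    """Open a TCP connection, layer TLS over it, and report what was negotiated."""
    # The default context verifies the server certificate and hostname.
    context = ssl.create_default_context()
    # Step 1: an ordinary, unencrypted TCP connection.
    with socket.create_connection((host, port), timeout=timeout) as tcp_sock:
        # Step 2: the TLS handshake runs over that TCP connection.
        with context.wrap_socket(tcp_sock, server_hostname=host) as tls_sock:
            # Returns the protocol version string and the negotiated cipher tuple.
            return tls_sock.version(), tls_sock.cipher()
```

Any application protocol (HTTP, SMTP, IMAP and so on) would then be spoken over the wrapped socket in place of the plain TCP socket.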

Why should I care about TLS?

Data has historically been transmitted unencrypted over the Internet, and where encryption was used, it was typically employed in a piecemeal fashion for sensitive information such as passwords or payment details. Whilst it was recognised back in 1996 (by RFC 1984) that the growth of the Internet would require private data to be protected, it has become increasingly apparent over the intervening period that the capabilities of eavesdroppers and attackers are greater and more pervasive than previously thought. The IAB therefore released a statement in November 2014 calling on protocol designers, developers, and operators to make encryption the norm for Internet traffic, which essentially means making it confidential by default.

Without TLS, sensitive information such as logins, credit card details and personal details can easily be gleaned by others, and browsing habits, e-mail correspondence, online chats and conference calls can be monitored. Enabling client and server applications to support TLS ensures that data transmitted between them is encrypted with secure algorithms and not viewable by third parties.

Recent versions of all major web browsers currently support TLS, and it is increasingly common for web servers to support TLS by default. However, use of TLS for e-mail and certain other applications is still often not mandatory, and unlike with web browsers, which provide visual cues, it is not always apparent to users whether their connections are encrypted.

It is therefore recommended that all clients and servers insist on mandatory usage of TLS in their communications, preferably with the most recent version, TLS 1.2. For complete security, it is necessary to use it in conjunction with a publicly trusted X.509 Public Key Infrastructure (PKI), and preferably DNSSEC as well, in order to verify that the system to which a connection is being made is indeed what it claims to be.
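As a rough sketch of what insisting on TLS 1.2 and certificate validation might look like on the client side, the following uses Python's standard `ssl` module. The helper name `strict_client_context` is an assumption for this example; DNSSEC validation is outside the scope of this module and is not shown.

```python
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Build a client context that refuses anything older than TLS 1.2."""
    # Loads the system's publicly trusted root certificates and turns on
    # certificate and hostname verification.
    context = ssl.create_default_context()
    # Reject SSL 3.0, TLS 1.0 and TLS 1.1 during the handshake.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

A connection made with this context should fail outright, rather than silently downgrade, if the server only offers an older protocol version or presents a certificate that does not chain to a trusted root.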

How does TLS work?

TLS uses a combination of symmetric and asymmetric cryptography, as this provides a good compromise between performance and security when transmitting data securely.

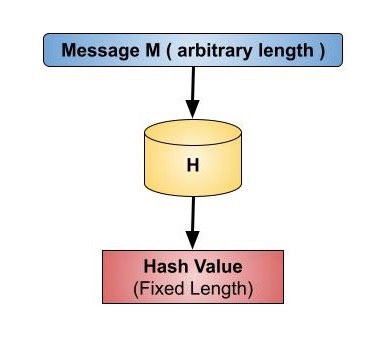

With symmetric cryptography, data is encrypted and decrypted with a secret key known to both sender and recipient; typically 128 but preferably 256 bits in length (anything less than 80 bits is now considered insecure). Symmetric cryptography is efficient in terms of computation, but having a common secret key means it needs to be shared in a secure manner.

Asymmetric cryptography uses key pairs – a public key, and a private key. The public key is mathematically related to the private key, but given sufficient key length, it is computationally impractical to derive the private key from the public key. This allows the public key of the recipient to be used by the sender to encrypt the data they wish to send to them, but that data can only be decrypted with the private key of the recipient.
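The relationship between the two keys can be illustrated with textbook RSA using deliberately tiny primes. This is a toy sketch only: the primes, exponent and message below are values chosen for this example, keys of this size are trivially breakable, and real implementations also apply padding schemes that are omitted here.

```python
# Toy RSA key pair built from tiny primes (illustration only, not secure).
p, q = 61, 53
n = p * q                    # modulus, part of both the public and private key
phi = (p - 1) * (q - 1)
e = 17                       # public exponent
d = pow(e, -1, phi)          # private exponent: modular inverse of e (Python 3.8+)


def encrypt(message: int) -> int:
    """Anyone holding the public key (n, e) can encrypt."""
    return pow(message, e, n)


def decrypt(ciphertext: int) -> int:
    """Only the holder of the private exponent d can decrypt."""
    return pow(ciphertext, d, n)


message = 65                 # must be smaller than n
ciphertext = encrypt(message)
recovered = decrypt(ciphertext)
```

Here `recovered` equals the original `message`, while an observer who sees only `n`, `e` and `ciphertext` would have to factor `n` to find `d`, which is what becomes impractical at realistic key lengths.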

The advantage of asymmetric cryptography is that the process of sharing encryption keys does not have to be secure, but the mathematical relationship between public and private keys means that much larger key sizes are required. The recommended minimum key length is 1024 bits, with 2048 bits preferred, but this is up to a thousand times more computationally intensive than symmetric keys of equivalent strength (e.g. a 2048-bit asymmetric key is approximately equivalent to a 112-bit symmetric key) and makes asymmetric encryption too slow for many purposes.

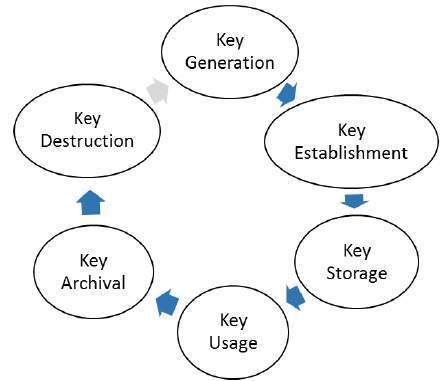

For this reason, TLS uses asymmetric cryptography for securely generating and exchanging a session key. The session key is then used for encrypting the data transmitted by one party, and for decrypting the data received at the other end. Once the session is over, the session key is discarded.

A variety of different key generation and exchange methods can be used, including RSA, Diffie-Hellman (DH), Ephemeral Diffie-Hellman (DHE), Elliptic Curve Diffie-Hellman (ECDH) and Ephemeral Elliptic Curve Diffie-Hellman (ECDHE). DHE and ECDHE also offer forward secrecy, whereby a session key will not be compromised if one of the private keys is obtained in future. That said, weak random number generation and/or usage of a limited range of prime numbers have been postulated to allow the cracking of even 1024-bit DH keys given state-level computing resources. These may be considered implementation rather than protocol issues, and there are tools available to test for weaker cipher suites.
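The idea behind an ephemeral Diffie-Hellman exchange can be sketched in a few lines of Python. The group parameters `P` and `G` and the use of SHA-256 to turn the shared secret into a session key are assumptions made for this illustration; real TLS uses standardised groups or elliptic curves of much greater strength and its own key derivation procedure.

```python
import hashlib
import secrets

# Toy group parameters (assumptions for illustration, far too small for real use).
P = 2**127 - 1   # a Mersenne prime used as the modulus
G = 3            # base


def ephemeral_keypair():
    """Generate a fresh private/public value pair for a single session."""
    private = secrets.randbelow(P - 3) + 2    # random value in [2, P - 2]
    public = pow(G, private, P)               # safe to send in the clear
    return private, public


def session_key(own_private: int, peer_public: int) -> bytes:
    """Combine our private value with the peer's public value."""
    shared = pow(peer_public, own_private, P)
    # Hash the shared secret down to a 256-bit symmetric session key.
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


# Each side generates its own pair and exchanges only the public values.
client_private, client_public = ephemeral_keypair()
server_private, server_public = ephemeral_keypair()

client_key = session_key(client_private, server_public)
server_key = session_key(server_private, client_public)
```

Both sides arrive at the same session key without it ever crossing the network. Because the private values are generated per session and discarded afterwards, later compromise of a long-term private key does not reveal past session keys, which is the forward secrecy property described above.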

With TLS it is also desirable that a client connecting to a server is able to validate ownership of the server’s public key. This is normally undertaken using an X.509 digital certificate issued by a trusted third party known as a Certificate Authority (CA) which asserts the authenticity of the public key. In some cases, a server may use a self-signed certificate which needs to be explicitly trusted by the client (browsers should display a warning when an untrusted certificate is encountered), but this may be acceptable in private networks and/or where secure certificate distribution is possible. It is, however, highly recommended to use certificates issued by publicly trusted CAs.

What is a CA?

A Certificate Authority (CA) is an entity that issues digital certificates conforming to the ITU-T’s X.509 standard for Public Key Infrastructures (PKIs). Digital certificates certify the public key of the owner of the certificate (known as the subject), and that the owner controls the domain being secured by the certificate. A CA therefore acts as a trusted third party that gives clients (known as relying parties) assurance they are connecting to a server operated by a validated entity.

End entity certificates are themselves validated through a chain-of-trust originating from a root certificate, otherwise known as the trust anchor. With asymmetric cryptography it is possible to use the private key of the root certificate to sign other certificates, which can then be validated using the public key of the root certificate and therefore inherit the trust of the issuing CA. In practice, end entity certificates are usually signed by one or more intermediate certificates (sometimes known as subordinate or sub-CAs) as this protects the root certificate in the event that an end entity certificate is incorrectly issued or compromised.
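The chain-of-trust check can be modelled with a toy example in Python. Everything here is a simplification invented for illustration: `ToyCert`, `ToyKey`, `issue` and `verify_chain` are hypothetical names, the "certificates" are not X.509, and the RSA keys are built from small Mersenne primes with unpadded signatures, none of which is secure.

```python
import hashlib
from dataclasses import dataclass

E = 65537  # public exponent shared by all toy keys


@dataclass
class ToyKey:
    n: int  # public modulus
    d: int  # private exponent


@dataclass
class ToyCert:
    subject: str
    public_n: int   # the subject's public modulus
    issuer: str
    signature: int  # issuer's signature over the fields above


def make_key(p: int, q: int) -> ToyKey:
    return ToyKey(n=p * q, d=pow(E, -1, (p - 1) * (q - 1)))


def digest(subject: str, public_n: int, issuer: str, modulus: int) -> int:
    data = f"{subject}|{public_n}|{issuer}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % modulus


def issue(subject: str, subject_n: int, issuer: str, issuer_key: ToyKey) -> ToyCert:
    """Sign the subject's name and public key with the issuer's private key."""
    h = digest(subject, subject_n, issuer, issuer_key.n)
    return ToyCert(subject, subject_n, issuer, pow(h, issuer_key.d, issuer_key.n))


def verify_chain(chain, trust_anchors) -> bool:
    """chain is ordered end entity first; trust_anchors maps root name to modulus."""
    if not chain:
        return False
    for i, cert in enumerate(chain):
        if i + 1 < len(chain):
            # Signed by the next certificate in the chain.
            if chain[i + 1].subject != cert.issuer:
                return False
            issuer_n = chain[i + 1].public_n
        else:
            # The last certificate must be signed by a locally trusted root.
            issuer_n = trust_anchors.get(cert.issuer)
            if issuer_n is None:
                return False
        expected = digest(cert.subject, cert.public_n, cert.issuer, issuer_n)
        if pow(cert.signature, E, issuer_n) != expected:
            return False
    return True


root_key = make_key(2**89 - 1, 2**107 - 1)
intermediate_key = make_key(2**61 - 1, 2**127 - 1)
server_n = (2**19 - 1) * (2**31 - 1)   # the server never signs, so only n is needed

intermediate_cert = issue("Toy Intermediate CA", intermediate_key.n, "Toy Root CA", root_key)
server_cert = issue("www.example.org", server_n, "Toy Intermediate CA", intermediate_key)

trust_anchors = {"Toy Root CA": root_key.n}
chain_ok = verify_chain([server_cert, intermediate_cert], trust_anchors)
```

The root's private key is used only once here, to sign the intermediate, which mirrors why real CAs keep root keys offline and issue end entity certificates from intermediates.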

Root certificate trust is normally established through physical distribution of the root certificates in operating systems or browsers. The main certification programs are run by Microsoft (Windows & Windows Phone), Apple (OS X & iOS) and Mozilla (Firefox & Linux) and require CAs to conform to stringent technical requirements and complete a WebTrust, ETSI EN 319 411-3 (formerly TS 102 042) or ISO 21188:2006 audit in order to be included in their distributions. WebTrust is a programme developed by the American Institute of Certified Public Accountants and the Canadian Institute of Chartered Accountants, ETSI is the European Telecommunications Standards Institute, whilst ISO is the International Organization for Standardization.

Root certificates distributed with major operating systems and browsers are said to be publicly or globally trusted, and the technical and audit requirements essentially mean that the issuing CAs are multinational corporations or governments. There are currently around fifty publicly trusted CAs, although most if not all have more than one root certificate, and most are also members of the CA/Browser Forum, which develops industry guidelines for issuing and managing certificates.

It is, however, also possible to set up private CAs and establish trust through secure distribution and installation of root certificates on client systems. Examples include the RPKI CAs operated by the Regional Internet Registries (AfriNIC, APNIC, ARIN, LACNIC and RIPE NCC) that issue certificates to Local Internet Registries attesting to the IP addresses and AS numbers they hold, as well as the International Grid Trust Federation (IGTF), which provides a trust anchor for issuing server and client certificates used by machines in distributed scientific computing. In these cases, the root certificates can be securely downloaded and installed from sites using a certificate issued by a publicly trusted CA.